Picture this: You’ve just published a new blog post, and you’re feeling all kinds of accomplished. You log into your Google Search Console to check how it’s doing and BAM! You see crawl errors. Suddenly, your triumphant mood is replaced by a feeling of panic and dread. Don’t worry, my friend. We’ve all been there. In this post, we’ll go through what crawl errors are and how to fix them using Google Search Console.

What are crawl errors?

Crawl errors happen when a search engine’s crawler (Googlebot, in Google’s case) tries to reach a page on your site and fails, or gets back a response it can’t use. They can be caused by a variety of things, such as broken links, server issues, faulty redirects, or simply forgetting to update your sitemap. Whatever the cause, the affected pages can’t be crawled properly, so Google may not index or rank them until the errors are fixed.

How to find crawl errors in Google Search Console

Google Search Console is an invaluable tool for spotting crawl errors on your website and confirming that your fixes worked. Here’s how to use it:

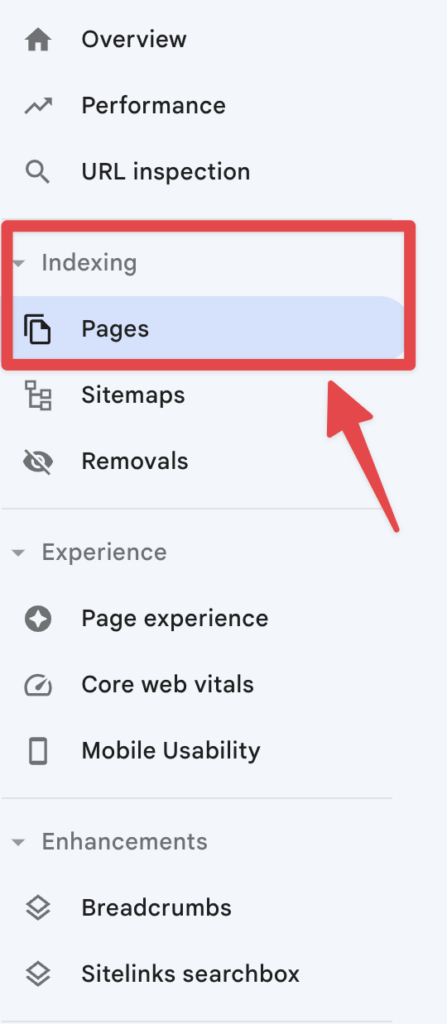

1. Log into your Google Search Console account, choose your property, and select “Pages” (under “Indexing”) from the left navigation menu.

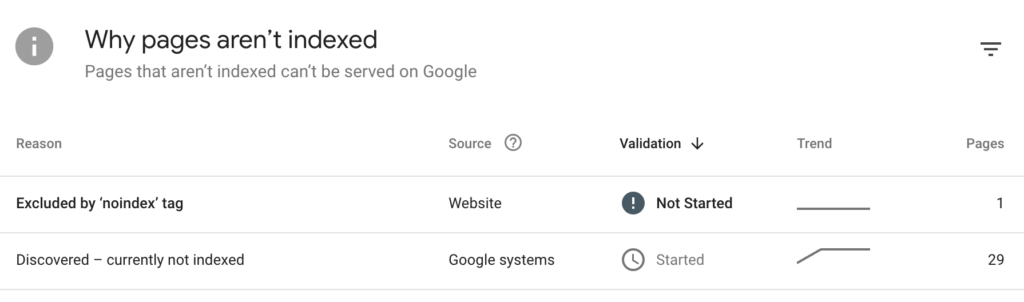

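2. Scroll down to the “Why pages aren’t indexed” section, which lists each reason Google couldn’t index some of your pages and how many pages it affects. Not every reason is an error; some, such as “Excluded by ‘noindex’ tag,” may be intentional.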

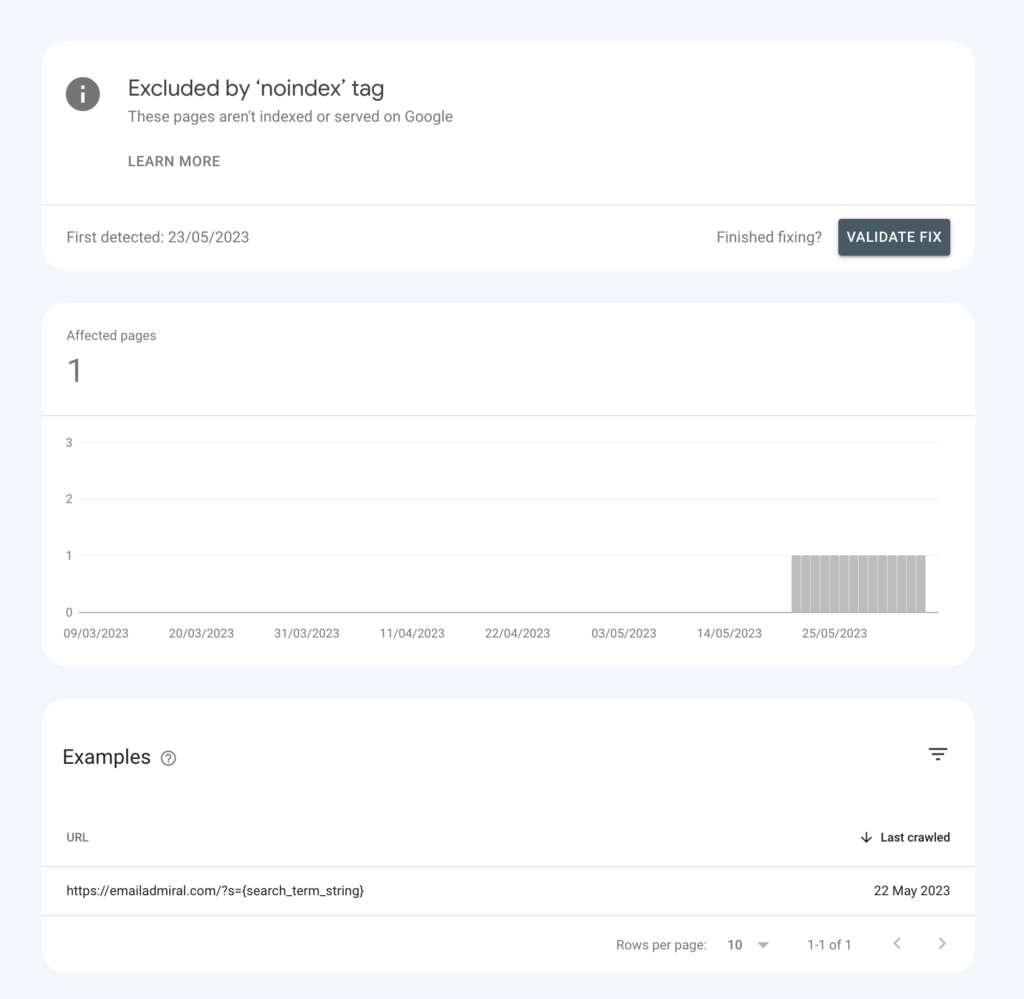

3. Click a reason to see the affected URLs and details about what is causing the problem.

4. Make the necessary changes to your website or server to address the issue (you may need help from a web developer for this).

5. Once you’ve made your changes, go back to Search Console, open the same reason, and click “Validate fix.” Validation can take several days or longer, and Search Console will let you know whether your fix worked.

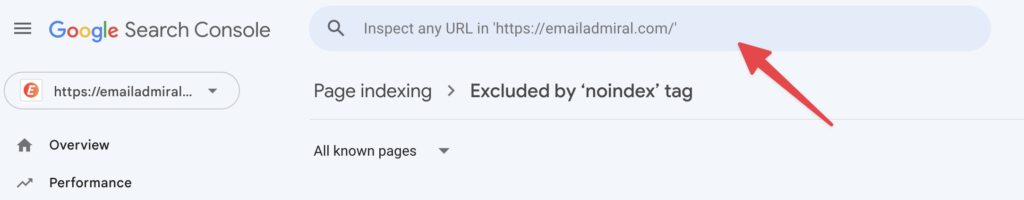

6. For important pages, you can also ask Google to re-crawl them directly: click the “Inspect any URL in ‘yoursitehere’” bar at the top of Google Search Console, enter the URL that had the crawl error, and click “Request indexing.”

7. Once Google has re-crawled the affected pages, the crawl errors should disappear from the report.

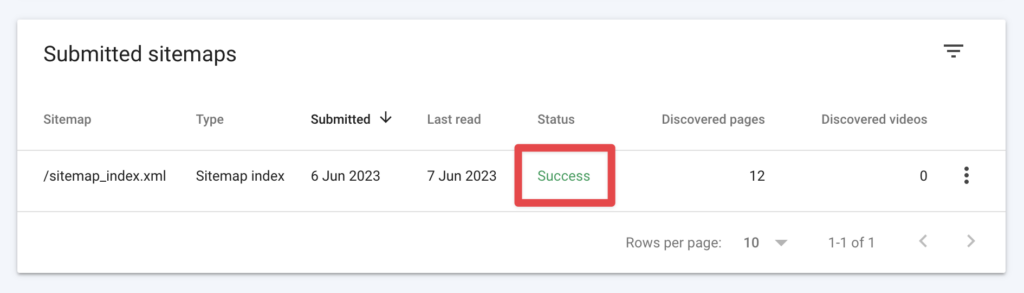

Your sitemap and internal links matter too: stale sitemap entries and broken internal links send Googlebot to URLs that no longer work. Make sure your sitemap is up to date and submitted in Google Search Console (under “Sitemaps”), and regularly review your site’s internal links to catch broken or outdated ones. Tools like Screaming Frog or Ahrefs can help with a deeper crawl error analysis.
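If you want to spot stale sitemap entries yourself, here’s a minimal Python sketch using the standard library’s `xml.etree.ElementTree`. It reads a standard `<urlset>` sitemap and lists entries that aren’t in a set of URLs you know are live. The function names are our own, `live_urls` is a hypothetical input (for example, an export from your CMS), and sitemap index files (`<sitemapindex>`) aren’t handled.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> URL in a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def stale_entries(listed: list[str], live_urls: set[str]) -> list[str]:
    """Return sitemap URLs that are not in the set of URLs known to be live."""
    return sorted(set(listed) - live_urls)
```

Any URL that `stale_entries` returns is a good candidate for removal from the sitemap, or for a redirect if the page moved.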

A list of common crawl errors and how to fix them

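Here’s a list of common crawl errors in Google Search Console, along with their possible causes and recommended fixes. Note that site-wide problems such as DNS, server connectivity, and robots.txt fetch errors are reported in the Crawl stats report (under “Settings,” in the “Host status” section) rather than in the Pages report.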

1. DNS Errors: These occur when search engine crawlers cannot resolve your website’s domain name to an IP address.

- Possible Causes: Issues with your DNS provider or incorrect DNS settings.

- Fix: Check your DNS settings, ensure they’re correctly configured, and contact your DNS provider if necessary.

2. Server Connectivity Errors: These happen when search engine crawlers cannot connect to your server.

- Possible Causes: Server downtime, network issues, or server overload.

- Fix: Verify your server’s uptime, check for network problems, and optimize server performance.

3. Robots.txt Fetch Errors: These occur when crawlers cannot access your robots.txt file, which provides instructions on which parts of your site should or shouldn’t be crawled.

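- Possible Causes: Your server returning a 5xx error or timing out when Googlebot requests robots.txt, or broader server connectivity issues. A robots.txt file that is simply missing (and returns a 404) isn’t an error; Google treats that as having no crawl restrictions.

- Fix: Make sure robots.txt either loads successfully with valid rules or returns a clean 404, and check for server connectivity issues.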

4. 404 (Not Found) Errors: These happen when crawlers try to access a URL that doesn’t exist on your site.

- Possible Causes: Deleted pages, broken internal or external links, or mistyped URLs.

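- Fix: Create 301 redirects for permanently moved pages, update broken links, restore pages that were deleted by mistake, or correct mistyped URLs. If a page was removed on purpose and has no replacement, a 404 is the correct response and the error can be left alone.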

5. Soft 404 Errors: These occur when a page returns a “200 OK” status code but should really return “404 Not Found,” because the page doesn’t actually exist or has little to no useful content.

- Possible Causes: Improper server configuration or incorrect handling of non-existent pages.

- Fix: Update your server configuration to return the correct “404 Not Found” status code for non-existent pages or improve the content on those pages.

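6. Crawl Anomaly: This was a catch-all category for any crawling issue that didn’t fit into the other categories. Google has since replaced it with more specific reasons, so you’re most likely to run into it in older reports and guides.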

- Possible Causes: Various, depending on the specific issue.

- Fix: Investigate the specific issue in Google Search Console and address it accordingly.

7. Blocked by robots.txt: These errors occur when your robots.txt file is blocking crawlers from accessing certain pages or sections of your site.

- Possible Causes: Intentional or unintentional blocking of pages in the robots.txt file.

- Fix: Review your robots.txt file and update it to allow search engines to crawl the desired pages.

8. Duplicate Content: This issue arises when multiple URLs have identical or very similar content. In the Pages report it shows up under reasons such as “Duplicate without user-selected canonical.”

- Possible Causes: Dynamic URL generation, session IDs in URLs, or improper use of canonical tags.

- Fix: Implement canonical tags to indicate the preferred version of a page, use “noindex” meta tags for non-important pages, or consolidate similar content into a single page.

9. Redirect Errors: These occur when there are issues with your site’s redirects, such as redirect loops or chains.

- Possible Causes: Incorrectly configured redirects, temporary redirects that should be permanent, or multiple redirects in a sequence.

- Fix: Point each redirect directly at the final URL, use permanent (301) redirects for permanent moves, and remove any redirect chains or loops.

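10. Mobile Usability Issues: These errors indicate that your site has problems with mobile-friendliness, which can affect its ranking in mobile search results. Google retired Search Console’s Mobile Usability report in December 2023, so you may need another tool, such as Lighthouse, to find them.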

- Possible Causes: Non-responsive design, small font sizes, or elements too close together for easy tapping on touchscreens.

- Fix: Implement a responsive design, increase font sizes for readability, and ensure adequate spacing between tap targets.

Keep in mind that this list is not exhaustive, but it covers the most common crawl errors you may encounter in Google Search Console. Regularly monitoring and addressing these errors will help improve your site’s SEO performance.
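If Search Console reports DNS or server connectivity errors (items 1 and 2 above), a quick first check is to resolve your domain and open a TCP connection to it from your own machine. Here’s a minimal Python sketch using only the standard library; the function names are our own, and a successful result from your network doesn’t guarantee that Googlebot can reach you (a firewall or CDN rule could still be blocking it).

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses hostname resolves to, or [] if lookup fails."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        # Lookup failed: roughly the situation a crawler reports as a DNS error.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts, and unreachable hosts all land here.
        return False
```

For example, `resolve("yoursitehere.com")` returning an empty list points to a DNS problem, while `can_connect("yoursitehere.com", 443)` returning `False` suggests a server or network issue.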
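For robots.txt problems (items 3 and 7 above), you can test which URLs your rules block before Googlebot does. This sketch uses Python’s built-in `urllib.robotparser`; `blocked_urls` is a hypothetical helper name, and the sample rules and URLs are made up. One caveat: Python’s parser applies the first matching rule rather than the most specific one and doesn’t support the `*` and `$` wildcards Google understands, so Google may read some files differently. Treat it as a quick sanity check and rely on Search Console for the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Made-up example rules: keep crawlers out of the admin area and draft posts.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/drafts/
"""
```

To test your live file instead of a pasted copy, create a `RobotFileParser`, call `set_url()` with your robots.txt address and then `read()`, and use `can_fetch()` as above.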
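The fixes for 404s, soft 404s, and redirect errors (items 4, 5, and 9 above) all come down to sending the right status code. How you do that depends entirely on your server or CMS, so treat this as a framework-neutral illustration only: a minimal Python WSGI app with hypothetical `PAGES` and `MOVED` maps that returns 200 for real pages, a single 301 for moved ones, and a genuine 404 (rather than a 200 “not found” page) for everything else.

```python
# Hypothetical URL maps; in a real site these would come from your CMS or router.
PAGES = {
    "/": "<h1>Home</h1>",
    "/blog/new-post/": "<h1>New post</h1>",
}
MOVED = {"/blog/old-post/": "/blog/new-post/"}


def app(environ, start_response):
    """Minimal WSGI app: 200 for real pages, 301 for moved ones, 404 otherwise."""
    path = environ.get("PATH_INFO", "/")
    html = [("Content-Type", "text/html; charset=utf-8")]
    if path in PAGES:
        start_response("200 OK", html)
        return [PAGES[path].encode("utf-8")]
    if path in MOVED:
        # One permanent redirect straight to the final URL, so no chain forms.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    # Unknown paths get a real 404 status, not a 200 "not found" page (a soft 404).
    start_response("404 Not Found", html)
    return [b"<h1>Page not found</h1>"]


def status_for(path: str) -> str:
    """Call the app directly (no server needed) and return the status it sends."""
    statuses = []
    app({"PATH_INFO": path}, lambda status, headers: statuses.append(status))
    return statuses[0]
```

To try it in a browser, you could serve it locally with `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()`.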
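For duplicate content and mobile usability (items 8 and 10 above), a lot can be spotted in a page’s `<head>`: the canonical link, any robots `noindex` directive, and the viewport meta tag that responsive designs rely on. This is a minimal sketch using Python’s built-in `html.parser`; the class and function names are our own, and it only reads the HTML you pass in (it doesn’t fetch pages or run JavaScript).

```python
from html.parser import HTMLParser
from typing import Optional


class HeadTagInspector(HTMLParser):
    """Collects the canonical URL, robots meta directives, and viewport meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None
        self.robots: Optional[str] = None
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        name = (attributes.get("name") or "").lower()
        if tag == "link" and rel == "canonical":
            self.canonical = attributes.get("href")
        elif tag == "meta" and name == "robots":
            self.robots = attributes.get("content")
        elif tag == "meta" and name == "viewport":
            self.viewport = attributes.get("content")


def inspect_head(html: str) -> HeadTagInspector:
    """Parse an HTML string and return the inspector holding what it found."""
    inspector = HeadTagInspector()
    inspector.feed(html)
    inspector.close()
    return inspector
```

A canonical pointing at another URL, or a `noindex` directive, tells Google not to index that page under its own URL, which is fine when intended but a common source of surprises. A missing viewport tag is a quick hint that the page isn’t set up for mobile.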

How to prevent crawl errors

In addition to fixing the errors, it is also important to take proactive steps to prevent them from occurring in the first place. These include:

- Following search engine guidelines and best practices when creating and managing content on your site.

- Regularly checking broken links, redirects, and other elements of your site’s architecture.

- Using a reliable website monitoring service to quickly identify any issues with your site’s performance.

- Setting up Google Search Console so that you receive notifications about any crawl errors detected by Googlebot.

- Ensuring your hosting environment is reliable and secure, with adequate resources for serving pages quickly and efficiently.
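Regularly checking redirects is easy to automate. Below is a small Python sketch that follows a URL through its redirects and labels the result as fine, a chain, a loop, or too long. The `next_hop` function is a hypothetical hook: in the test it is just a dictionary lookup, but against a live site you could implement it with Python’s `http.client`, returning the `Location` header of any 3xx response. The 10-hop limit is our own default.

```python
from typing import Callable, Optional


def trace_redirects(
    start: str,
    next_hop: Callable[[str], Optional[str]],
    max_hops: int = 10,
) -> tuple[list[str], str]:
    """Follow redirects from start and classify the outcome.

    next_hop(url) must return the URL that url redirects to, or None if it
    doesn't redirect. Returns (chain, verdict), where verdict is "ok" (at most
    one redirect), "chain" (two or more hops), "loop", or "too_long".
    """
    chain = [start]
    seen = {start}
    while True:
        target = next_hop(chain[-1])
        if target is None:
            # Reached a URL that doesn't redirect any further.
            return chain, ("ok" if len(chain) <= 2 else "chain")
        chain.append(target)
        if target in seen:
            # We've been here before, so following on would never end.
            return chain, "loop"
        seen.add(target)
        if len(chain) - 1 > max_hops:
            return chain, "too_long"
```

Any URL that comes back as a “chain” can usually be fixed by pointing its first redirect straight at the last URL in the chain.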

By taking these steps, you can help ensure that your site’s SEO performance is not hindered by crawl errors. Doing regular maintenance and monitoring can help you identify any issues quickly, so you can take action to fix them before they become a bigger problem.

Conclusion: fixing crawl errors in Google Search Console

Crawl errors are common, but fortunately most of them are fairly easy to diagnose and fix with the right tools and strategies. By monitoring Search Console and addressing errors as they appear, you can keep crawl errors and other technical issues from holding back your website’s SEO performance. With regular maintenance, your site will keep running smoothly and your pages will have their best chance to rank well in the search results!